About Us

MWR is an independent cyber security consultancy with research at the core of what we do. Solving our MEA clients’ unique cyber security challenges and ensuring they are more resilient to cyber-attacks is why we exist and continue to exist.

MWR is renowned for its technical excellence and client-centric approach to solving cyber security challenges. From partnering with some of Africa’s most renowned financial institutions, to advising large telecommunications and media corporations, our reputation of trust speaks for itself.

MWR works alongside organisations and their requirements to develop a comprehensive understanding of strategic needs. This approach allows us to provide security solutions that empower our clients, rather than restrict them.

Headquartered in Johannesburg South Africa, MWR services clients across Africa and The Middle East. The focus on these regions and their unique cyber security challenges allows us to ensure that our service offering and research initiatives are tailored to our target market.

We’ll never just fix the symptoms of a security issue, but will take the time to look for the root cause. Our integrity is fundamental to everything we do – we hate hype, abhor buzzwords and place an enormous emphasis on the outcomes our clients receive.

We believe that being at the forefront of research drives the high level of service MWR offers. Our ability to invest time into new endeavours keeps our consultants on the leading edge of security and gives us a unique insight into the mind of the attacker.

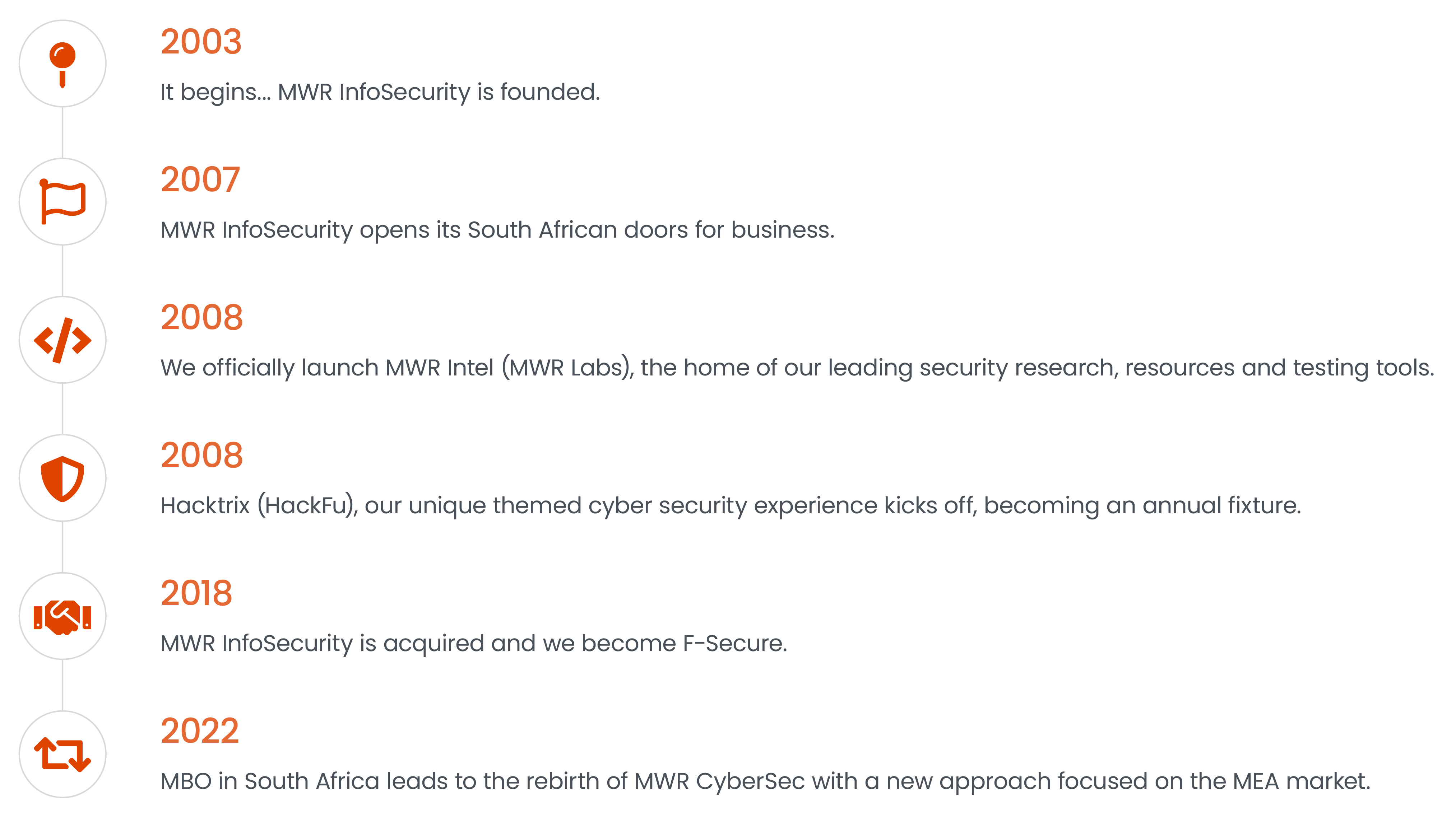

Our History

MWR’s history has sharpened our ability to protect our clients’ future.

Our Research

MWR has a dedicated commitment to research, with our technical consultants devoting time to conducting security research relevant to the industry and our clients. Whether the time is used to investigate new software, hardware, or protocols, we encourage our team to push the boundaries of what’s possible.

Since our inception, MWR’s research has won numerous industry awards, been presented at top-tier security conferences and gained worldwide press coverage. But most important of all, our research goes into supporting the high level of technical expertise available to our clients.

For example, by taking the time to break the latest smartphone, our consultants will have a deeper understanding of the device and therefore the methods a skilled attacker could use to find their way in. Along with the personal development this offers our people, the knowledge gained gives MWR the ability to secure our clients to the highest possible standard.

Our People

Being able to solve complex cyber security problems requires unique skillsets.

MWR consultants combine a passion for security with technical excellence and so we actively search for, and employ those that share that ethos. We love hacking things and solving problems at MWR. If this wasn’t our job, we’d be doing it as a hobby.

In addition to the above, communication is a vital quality for MWR consultants. It is essential to us that we are able to conduct assessments to find security vulnerabilities in systems and networks, but equally important is our ability to be able to effectively communicate these findings to our clients, at a level that is relevant to the audience. We aim to ensure that vulnerabilities and our recommendations are fully understood and communicated with the right level of context for the environment and organisation that we are working with.

Service Offering

Our specialist security solutions are delivered via our dedicated service areas. Each specialises in a different aspect of information security, providing a range of solutions tailored to the cyber security challenges that organisations currently face.

Industries

MWR applies our cutting-edge cyber security expertise and solutions across a range of industries.

Every day we deliver protection and peace of mind to clients who find themselves facing different cyber security challenges.

Our deep understanding of specific industry needs allows us to develop targeted solutions to address these challenges.

Training

MWR’s cyber security training courses are distinctive in letting you see your network and applications from the attacker’s viewpoint. Understanding the attacker mindset equips you to make the right decisions about the security of your own systems and applications.

Learn from the experts

Our consultants are experienced cyber security professionals who deal with penetration testing and security assessments every day. They deliver training that is highly relevant and up to date.

Hands-on practice

Our courses place the emphasis on practice, packed with hands-on exercises dealing with realistic applications and networks; all modeled on what MWR has observed in real-world penetration tests.

Cutting-edge knowledge

Our upgraded course syllabus and remodeled labs deliver a complete infrastructure of real-life applications and programs to immerse you in the realities of attack and defence.

Courses

Careers

Looking at getting into cyber security?